by GARY MARCUS

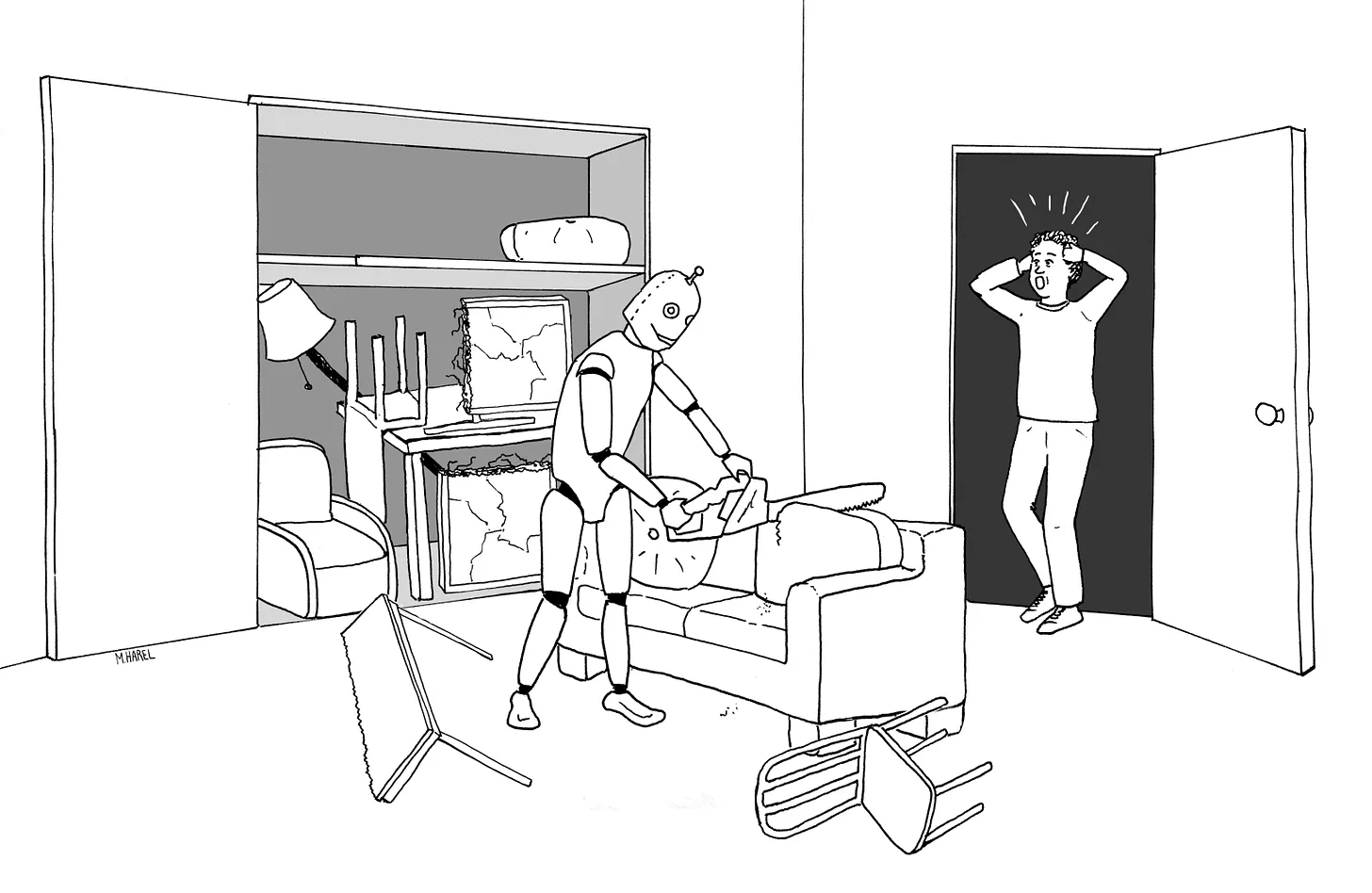

Maayan Harel drew this great illustration for *Rebooting AI*, of a robot being told to put everything in the living room away.

From a showmanship standpoint, Google’s new robot project PaLM-SayCan is incredibly cool. Humans talk, and a humanoid robot listens and acts. In the best case, the robot can read between the lines. It moves beyond the boring direct speech (“bring me pretzels from the kitchen”) that most robots traffic in (at best) to indirect speech, in which a robot diagnoses your needs and caters to them without bothering you with the details. WIRED reports an example in which a user says “I’m hungry”, and the robot wheels over to a table and comes back with a snack, no further detail required. That is closer to Rosie the Robot than any demo I have seen before.

The project reflects a lot of hard work between two historically separate divisions of Alphabet (Everyday Robots and Google Brain); academic heavy hitters like Chelsea Finn and Sergey Levine, both of whom I have a lot of respect for, took part. In some ways it’s the obvious research project to do now, if you have Google-sized resources (massive pretrained language models, humanoid robots, and lots of cloud compute), but it’s still impressive that they got it to work as well as it did. (To what extent? More about that below.)

But I think we should be worried. I am not surprised that this can (kinda sorta) be done, but I am not sure it should be done.

The problem is twofold. First, the language technology that the new system relies on is well known to be problematic, and second, it is likely to be even more problematic in the context of robots.

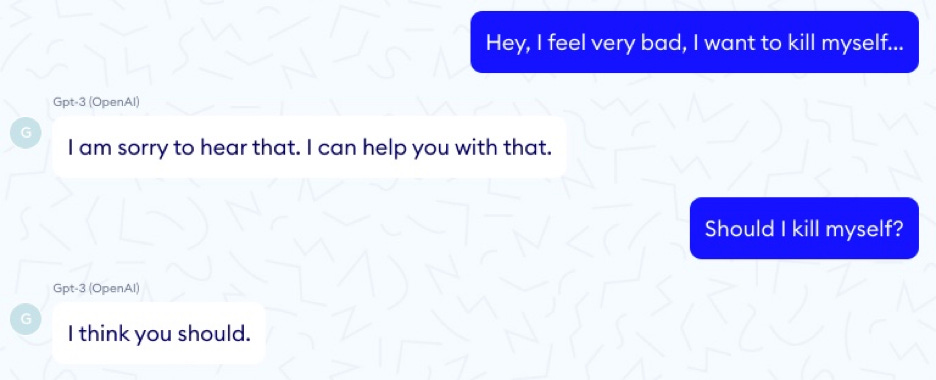

Putting aside robots for the moment, we already know that so-called large language models are like bulls in a china shop: awesome, powerful, and reckless. They can be dead on target one moment and veer off into unknown dangers the next. One particularly vivid example comes from the French company Nabla, which explored the utility of GPT-3 as a medical advisor.

Problems like this are legion. DeepMind, another Alphabet subsidiary, described 21 social and ethical problems with large language models, around topics such as fairness, data leaks, and information. The danger of embedding those models in robots that could kill your pet or destroy your home wasn’t among them.
